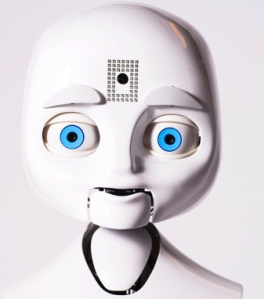

It’s not a typo: it definitely is ‘affective’ computing. Rosalind Picard runs a research group at the MIT Media Lab that looks into ways in which computers can interpret and respond to human emotions. She visited the Faraday Institute this week to give a lecture on ‘Playing God? Towards machines that deny their maker’ (watch/listen online). Besides descriptions of some fun and no doubt very useful new technology, such as a sociable robot called Kismet, there was plenty of food for thought.

What I find exciting about Rosalind Picard’s work is that, on top of her natural fascination with what can be done at an engineering level, she has really thought about the most positive uses of this technology.

One of the main applications of Rosalind Picard’s work in affective computing is for people on the autism spectrum. She has worked directly with people diagnosed with autism to develop systems that help them to interpret and respond to emotion. For example, her group has developed some incredibly sophisticated technology that reads facial expressions and tells the user what they mean. This is a very complicated skill that most people develop intuitively. Think about how many different meanings a smile can have: I like you, I’m pleased to meet you, I’m surprised, I’m shy, I’m embarrassed, and so on. Ros discovered that the easiest way to teach the computers to analyse facial expressions was to ask the individuals with autism themselves – as they have learned this skill the hard, non-intuitive way.

In her interview for the Test of Faith book Ros also described how they tried to anticipate how this technology could be used against people and to build in features that stop that. For example, if someone is wearing a sensor that indicates their stress levels, they should have control over it so that people cannot manipulate them in any way.

You could look at the output of places like MIT and focus on sci-fi scenarios of robots taking over the world, but this really isn’t the reality…